
Docker

Introduction:

Docker has revolutionized the way applications are deployed and managed. It provides a lightweight and portable environment that allows developers to package their applications and run them consistently across different platforms. In this guide, we'll explore Docker in simple terms, demystifying its key concepts and explaining why it's essential for modern software development and deployment. Whether you're a developer or an IT professional, this guide will help you grasp the fundamentals of Docker and its benefits.

Section 1: What is Docker?

Explaining the concept of containerization

Highlighting the advantages of Docker over traditional virtualization

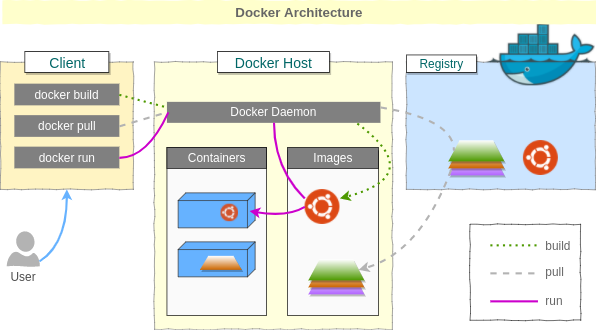

Describing Docker's architecture and components (Docker Engine, Docker images, and containers)

Containerization: Docker is a containerization platform that allows applications to run in isolated environments called containers. Containers encapsulate the application code, dependencies, and configurations, ensuring consistency and portability.

Advantages over virtualization: Containers share the host operating system's kernel instead of each running a full guest operating system, as virtual machines do. As a result, Docker offers more efficient resource utilization, much faster startup times, and greater scalability than traditional virtualization, which makes applications easier to deploy and manage.

Docker Architecture: Docker consists of three main components: Docker Engine, a client-server application in which the "docker" command-line client sends requests to the Docker daemon ("dockerd"), which builds images and runs containers; Docker images, lightweight, read-only templates that package everything needed to run an application; and Docker containers, the running instances of Docker images.
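To make the client/daemon split and the image-versus-container relationship concrete, here is a short sketch you can run on a machine with Docker installed. The `alpine:3.19` image and the `demo-1`/`demo-2` container names are arbitrary choices for illustration.

```shell
# The docker CLI is only a client: it sends each request to the Docker
# daemon (dockerd). `docker version` prints a Client and a Server section.
docker version

# An image is pulled (or built) once...
IMAGE="alpine:3.19"   # example image chosen for this sketch
docker pull "$IMAGE"

# ...and any number of independent containers can be created from it.
docker run --name demo-1 "$IMAGE" echo "first container"
docker run --name demo-2 "$IMAGE" echo "second container"

# Images and containers are separate objects with separate listings
# (-a includes containers that have already exited).
docker image ls "$IMAGE"
docker container ls -a --filter "name=demo-"

# Clean up the two stopped containers; the image stays available locally.
docker rm demo-1 demo-2
```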

Section 2: Key Docker Concepts

Dockerfile: Understanding how to create a Docker image using a Dockerfile

Docker Image: Discussing the role of Docker images and how they represent applications

Docker Container: Exploring containers as lightweight and isolated runtime instances of Docker images

Docker Registry: Introducing the concept of registries and their role in storing and distributing Docker images

Dockerfile: A text file that contains instructions to build a Docker image. It defines the application's environment, dependencies, and commands needed to create the image.
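As an illustration, the snippet below writes a minimal Dockerfile for a hypothetical one-file Python program and then builds and runs it. The `demo-app` directory, `app.py`, the `demo-app:1.0` tag and the `python:3.12-slim` base image are all assumptions made for this sketch.

```shell
# Create a hypothetical project directory with a tiny Python program.
mkdir -p demo-app
cat > demo-app/app.py <<'EOF'
print("Hello from inside a container")
EOF

# Write the Dockerfile: each instruction is one step of the image build.
cat > demo-app/Dockerfile <<'EOF'
# Base image that provides the Python runtime
FROM python:3.12-slim
# Working directory inside the image
WORKDIR /app
# Copy the application code into the image
COPY app.py .
# Default command when a container starts from this image
CMD ["python", "app.py"]
EOF

# Build an image from the demo-app directory, tag it, and run it once
# (--rm deletes the container after it exits).
docker build -t demo-app:1.0 demo-app
docker run --rm demo-app:1.0
```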

Docker Image: A lightweight, standalone package that includes the application code, dependencies, and runtime environment. Images are immutable and can be easily shared and deployed across different environments.

Docker Container: A runtime instance of a Docker image. Containers are isolated environments where the application can run. They are portable, scalable, and can be started, stopped, and managed independently.

Docker Registry: A service that stores Docker images, such as Docker Hub (the default registry used by the Docker CLI) or a private registry. Registries facilitate sharing and distribution of images across teams and environments, allowing easy access and retrieval of images for deployment.

Section 3: Getting Started with Docker

Installing Docker on different operating systems (Windows, macOS, Linux)

Running your first Docker container

Basic Docker commands for managing containers (starting, stopping, listing)

Installing Docker: To get started, install Docker on Windows, macOS, or Linux. Docker provides installation packages and guides for each operating system: Docker Desktop for Windows and macOS, and Docker Engine packages for the major Linux distributions.

Running your first Docker container: Use the "docker run" command to create and start a container from a specific Docker image. If the image is not already available locally, the command first pulls it from a registry (Docker Hub by default), then starts the container so your application runs within an isolated environment.
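A few common forms of "docker run" are sketched below. The `hello-world`, `nginx` and `alpine` images come from Docker Hub; the container name `first-web` and host port 8080 are arbitrary choices for illustration.

```shell
# Pull (if needed) and run hello-world: it prints a message and exits.
docker run hello-world

# Run nginx in the background (-d) under a chosen name, publishing host
# port 8080 to container port 80 so it answers at http://localhost:8080.
WEB_NAME="first-web"   # example container name for this sketch
docker run -d --name "$WEB_NAME" -p 8080:80 nginx

# Open an interactive shell in a throwaway Alpine container:
# -i keeps STDIN open, -t allocates a terminal, --rm deletes it on exit.
docker run -it --rm alpine sh
```

Typing `exit` in the Alpine shell ends that container; the nginx container keeps running until it is stopped.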

Basic Docker commands for managing containers: Use commands like "docker start", "docker stop", and "docker ps" to manage containers. "docker start" starts a stopped container, "docker stop" stops a running container, and "docker ps" lists the containers currently running on your system (add "-a" to include stopped ones).
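The lifecycle these commands manage can be walked through with one named container. The name `lifecycle-demo` and the `nginx` image are illustrative choices, not part of the original text.

```shell
CONTAINER="lifecycle-demo"   # example container name for this sketch

# Create and start a named container in the background.
docker run -d --name "$CONTAINER" nginx

docker ps                    # only running containers
docker stop "$CONTAINER"     # sends SIGTERM, then SIGKILL after a grace period
docker ps -a                 # -a also lists stopped containers
docker start "$CONTAINER"    # starts the same container again
docker rm -f "$CONTAINER"    # clean up: stop and remove it in one step
```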

Section 4: Building and Managing Docker Images

Building Docker images using Dockerfiles

Layer caching and incremental builds for faster image creation

Best practices for creating efficient and optimized Docker images

Pushing and pulling Docker images from registries

Building Docker images: Use Dockerfiles to define the steps required to build a Docker image. Dockerfiles specify the base image, dependencies, configurations, and commands needed to create the image.
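A small build sketch follows: it writes a three-step Dockerfile, builds and tags the image, and lists the layers that went into it. The `build-demo` directory and tag names are hypothetical, and `alpine:3.19` with `curl` is only an example.

```shell
mkdir -p build-demo
cat > build-demo/Dockerfile <<'EOF'
FROM alpine:3.19
RUN apk add --no-cache curl
CMD ["curl", "--version"]
EOF

# -t sets name:tag; the final argument is the build context directory
# whose files the Dockerfile can COPY.
docker build -t build-demo:1.0 build-demo

# One image can carry several tags.
docker tag build-demo:1.0 build-demo:latest

# Show the steps/layers recorded in the image, newest first.
docker image history build-demo:1.0
```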

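Layer caching and incremental builds: Docker builds an image step by step from the Dockerfile's instructions and caches the result of each step to speed up image creation. Steps whose instructions and input files haven't changed are reused, reducing build time. When a step does change, Docker rebuilds that step and every step after it, so placing rarely changing instructions (such as installing dependencies) before frequently changing ones (such as copying application code) keeps incremental builds fast.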
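The ordering advice can be sketched with a hypothetical Python project. The `cache-demo` directory, its `requirements.txt` (listing `flask`) and `app.py` are assumptions for illustration. With BuildKit, the default builder in recent Docker releases, reused steps appear as `CACHED` in the build output.

```shell
mkdir -p cache-demo
printf 'flask\n' > cache-demo/requirements.txt   # hypothetical dependency list
printf 'print("app v1")\n' > cache-demo/app.py

cat > cache-demo/Dockerfile <<'EOF'
FROM python:3.12-slim
WORKDIR /app
# Copy only the dependency list first: this step and the pip install
# stay cached until requirements.txt itself changes.
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt
# Application code changes most often, so it is copied last.
COPY . .
CMD ["python", "app.py"]
EOF

docker build -t cache-demo:1.0 cache-demo

# Change only the code and rebuild: everything up to and including the
# pip install is reused; only the final COPY and later steps rerun.
printf 'print("app v2")\n' > cache-demo/app.py
docker build -t cache-demo:1.0 cache-demo
```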

Best practices for efficient images: Minimize image size, reduce the number of layers, use appropriate base images, and leverage multi-stage builds. Clean up unnecessary files and dependencies to create lean and optimized Docker images.
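Multi-stage builds are one of the techniques listed above. The sketch below, assuming a hypothetical Go program in `multistage-demo/main.go`, compiles the code in a full `golang` image and copies only the resulting binary into an empty `scratch` image, so the compiler and build tools never reach the final image.

```shell
mkdir -p multistage-demo
cat > multistage-demo/main.go <<'EOF'
package main

import "fmt"

func main() { fmt.Println("Hello from a multi-stage build") }
EOF

cat > multistage-demo/Dockerfile <<'EOF'
# Stage 1: compile with the full Go toolchain
FROM golang:1.22 AS build
WORKDIR /src
COPY main.go .
# CGO_ENABLED=0 yields a static binary that needs no C library at runtime
RUN CGO_ENABLED=0 go build -o /out/hello main.go
# Stage 2: start from an empty image and copy in only the binary
FROM scratch
COPY --from=build /out/hello /hello
ENTRYPOINT ["/hello"]
EOF

docker build -t multistage-demo:1.0 multistage-demo
docker run --rm multistage-demo:1.0
docker image ls multistage-demo   # compare its size with the golang image
```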

Pushing and pulling images: Use "docker push" to upload your images to a Docker registry and "docker pull" to download them. Before pushing, tag the image with its repository name in that registry (for Docker Hub, typically "username/image:tag") using "docker tag". Registries enable easy sharing and distribution of Docker images across teams and environments.
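A push/pull round trip to Docker Hub might look like this. `your-username` and `myapp:1.0` are placeholders: substitute your own account and a locally built image.

```shell
DOCKERHUB_USER="your-username"          # placeholder Docker Hub account
LOCAL_IMAGE="myapp:1.0"                 # placeholder local image
REMOTE_IMAGE="$DOCKERHUB_USER/myapp:1.0"

# Authenticate with Docker Hub (prompts for credentials).
docker login

# Give the image a name under your account, then upload it.
docker tag "$LOCAL_IMAGE" "$REMOTE_IMAGE"
docker push "$REMOTE_IMAGE"

# On any other machine, download the same image by that name.
docker pull "$REMOTE_IMAGE"
```

For a registry other than Docker Hub, the image name starts with the registry host, for example `registry.example.com/team/myapp:1.0` (a hypothetical host).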

Section 5: Networking and Data Management with Docker

Docker networking: Bridging containers and exposing ports

Managing data in Docker containers: volumes and bind mounts

Docker Compose: Orchestrating multi-container applications with ease

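Docker networking: Docker connects containers through networks, by default a bridge network on the host. Containers on the same network can communicate using IP addresses, and on a user-defined bridge network they can also reach each other by container name through Docker's built-in DNS. To allow external access to a containerized application, publish its ports to the host (for example, with the "-p" option of "docker run").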
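A sketch of name-based communication on a user-defined bridge network follows. The network name `app-net` and container name `net-web` are illustrative; the client container uses BusyBox `wget`, which ships with the `alpine` image.

```shell
NET="app-net"   # example network name for this sketch

# Create a user-defined bridge network with built-in DNS for container names.
docker network create "$NET"

# Start nginx on that network and also publish it to the host on port 8080.
docker run -d --name net-web --network "$NET" -p 8080:80 nginx

# A second container on the same network reaches nginx by its name.
docker run --rm --network "$NET" alpine wget -qO- http://net-web

# Show the network's configuration and attached containers.
docker network inspect "$NET"
```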

Managing data in Docker containers: Docker provides two main approaches for data management: volumes and bind mounts. Volumes are managed by Docker and persist data even after the container is deleted. Bind mounts map a path on the host into the container, so the container and the host system share the same files.
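Both approaches are shown below. The volume name `app-data`, the host directory `site` and the container name `static-site` are illustrative; `/usr/share/nginx/html` is the directory the official `nginx` image serves files from.

```shell
# Named volume: Docker creates and manages the storage location.
docker volume create app-data
docker run --rm -v app-data:/data alpine sh -c 'echo saved > /data/note.txt'
# A brand-new container still sees the file: the data outlived the first one.
docker run --rm -v app-data:/data alpine cat /data/note.txt

# Bind mount: expose a host directory inside the container (:ro = read-only).
mkdir -p site
echo '<h1>Hello from the host</h1>' > site/index.html
docker run -d --name static-site -p 8081:80 \
  -v "$(pwd)/site:/usr/share/nginx/html:ro" nginx
```

Editing `site/index.html` on the host changes what nginx serves at `http://localhost:8081` immediately, because both sides see the same files.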

Docker Compose: Docker Compose simplifies the orchestration of multi-container applications. It allows for defining and managing multiple containers as a single application stack. Compose files specify the services, networks, volumes, and dependencies required, enabling easy deployment and scaling of complex applications.
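As an example, the snippet writes a two-service Compose file (nginx plus Redis with a named volume) and drives it with the Compose V2 `docker compose` command. The service names, images and `compose-demo` directory are assumptions for illustration.

```shell
mkdir -p compose-demo
cat > compose-demo/compose.yaml <<'EOF'
services:
  web:
    image: nginx
    ports:
      - "8080:80"
    depends_on:
      - cache
  cache:
    image: redis:7
    volumes:
      - cache-data:/data
volumes:
  cache-data:
EOF

# Start every service in the background, list them, then remove the stack.
docker compose -f compose-demo/compose.yaml up -d
docker compose -f compose-demo/compose.yaml ps
docker compose -f compose-demo/compose.yaml down
```

Compose creates a shared network for the stack, so `web` can reach Redis at the hostname `cache`.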

Section 6: Docker in Production Environments

Deploying Docker containers to production servers

Container orchestration tools: Kubernetes and Docker Swarm

Scaling applications with Docker

Monitoring and logging Docker containers

Deploying Docker containers: Docker containers can be deployed to production servers using various methods like manual deployment, continuous integration/continuous deployment (CI/CD) pipelines, or container orchestration tools.

Container orchestration tools: Kubernetes and Docker Swarm are popular container orchestration platforms. They automate container deployment, scaling, and management, ensuring high availability, load balancing, and fault tolerance in production environments.

Scaling applications with Docker: Docker enables horizontal scaling by replicating containers across multiple hosts and distributing the application's load. Scaling can be done manually or automatically based on predefined rules or metrics, ensuring optimal resource utilization and performance.
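Docker Swarm is built into Docker, so it offers a quick way to try replicated services and scaling on a single machine (Kubernetes uses its own tooling and is not shown here). The service name `web` and replica counts are illustrative.

```shell
SERVICE="web"   # example service name for this sketch

# Turn this Docker host into a single-node swarm manager.
# (On hosts with several network interfaces, add --advertise-addr <IP>.)
docker swarm init

# Run nginx as a replicated service: Swarm keeps 3 tasks running and
# routes requests on published port 8080 to any of them.
docker service create --name "$SERVICE" --replicas 3 -p 8080:80 nginx

# Scale horizontally by changing the desired replica count.
docker service scale "$SERVICE"=5
docker service ls
docker service ps "$SERVICE"

# When finished: docker service rm web && docker swarm leave --force
```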

Monitoring and logging Docker containers: Monitoring tools such as Prometheus (metrics collection) and Grafana (dashboards), together with logging solutions such as the ELK Stack (Elasticsearch, Logstash, and Kibana), can be used to collect metrics and logs from Docker containers. These tools help track performance, identify issues, and manage containerized applications efficiently in production environments.
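Before adding external tools, Docker's own CLI already exposes basic metrics and logs. A minimal sketch, assuming a running container named `web` (a hypothetical name):

```shell
CONTAINER="web"   # hypothetical name of a running container

# One snapshot of CPU, memory, network and block I/O for running containers
# (without --no-stream the view refreshes continuously).
docker stats --no-stream

# The same data with chosen columns, using a Go template.
docker stats --no-stream --format 'table {{.Name}}\t{{.CPUPerc}}\t{{.MemUsage}}'

# The container's last 50 log lines with timestamps (add -f to follow).
docker logs --tail 50 --timestamps "$CONTAINER"
```

Tools such as Prometheus or the ELK Stack then collect this kind of data continuously across many hosts instead of on demand.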

Day 16 Task:

- Use the `docker run` command to start a new container and interact with it through the command line. [Hint: `docker run hello-world`]
- Use the `docker inspect` command to view detailed information about a container or image.
- Use the `docker port` command to list the port mappings for a container.
- Use the `docker stats` command to view resource usage statistics for one or more containers.
- Use the `docker top` command to view the processes running inside a container.
- Use the `docker save` command to save an image to a tar archive.
- Use the `docker load` command to load an image from a tar archive.

Steps to install Docker

sudo apt-get update

sudo apt-get install docker.io

sudo usermod -aG docker $USER

The command sudo usermod -aG docker $USER adds the current user to the "docker" group so that Docker commands can be run without sudo. This allows the user to manage Docker containers and images without needing root privileges for every Docker command. The new group membership only applies to new login sessions, so log out and back in, or reboot:

sudo reboot

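One way to work through the Day 16 tasks is sketched below. The container names `task-shell` and `task-web`, the `ubuntu` and `nginx` images, host port 8080 and the archive name `nginx.tar` are all choices made for this walkthrough.

```shell
# Task 1 - docker run: start containers and interact with one.
docker run hello-world
docker run -it --rm --name task-shell ubuntu bash   # type `exit` to leave

# A long-running container to use for the remaining tasks.
NAME="task-web"   # example container name for this walkthrough
docker run -d --name "$NAME" -p 8080:80 nginx

# Task 2 - docker inspect: full JSON details for a container or an image.
docker inspect "$NAME"
docker inspect --format '{{.State.Status}}' "$NAME"   # pick out one field
docker image inspect nginx

# Task 3 - docker port: container port -> host address:port mappings.
docker port "$NAME"

# Task 4 - docker stats: resource usage (--no-stream prints one snapshot).
docker stats --no-stream "$NAME"

# Task 5 - docker top: processes running inside the container.
docker top "$NAME"

# Task 6 - docker save: write the image, with all its layers, to a tar file.
docker save -o nginx.tar nginx

# Task 7 - docker load: restore the image from that tar file.
docker load -i nginx.tar
```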


Thank you for reading. I hope you were able to understand and learn something new from my blog.

Happy Learning!

Please follow me on Hashnode and do connect with me on LinkedIn ArnavSingh